Convolutional Neural Networks (CNNs) are a specialized class of deep learning models designed primarily for processing structured grid data, such as images. The architecture of CNNs is inspired by the biological processes of the visual cortex, where individual neurons respond to stimuli in specific regions of the visual field. This design allows CNNs to effectively capture spatial hierarchies in data, making them particularly adept at recognizing patterns and features within images.

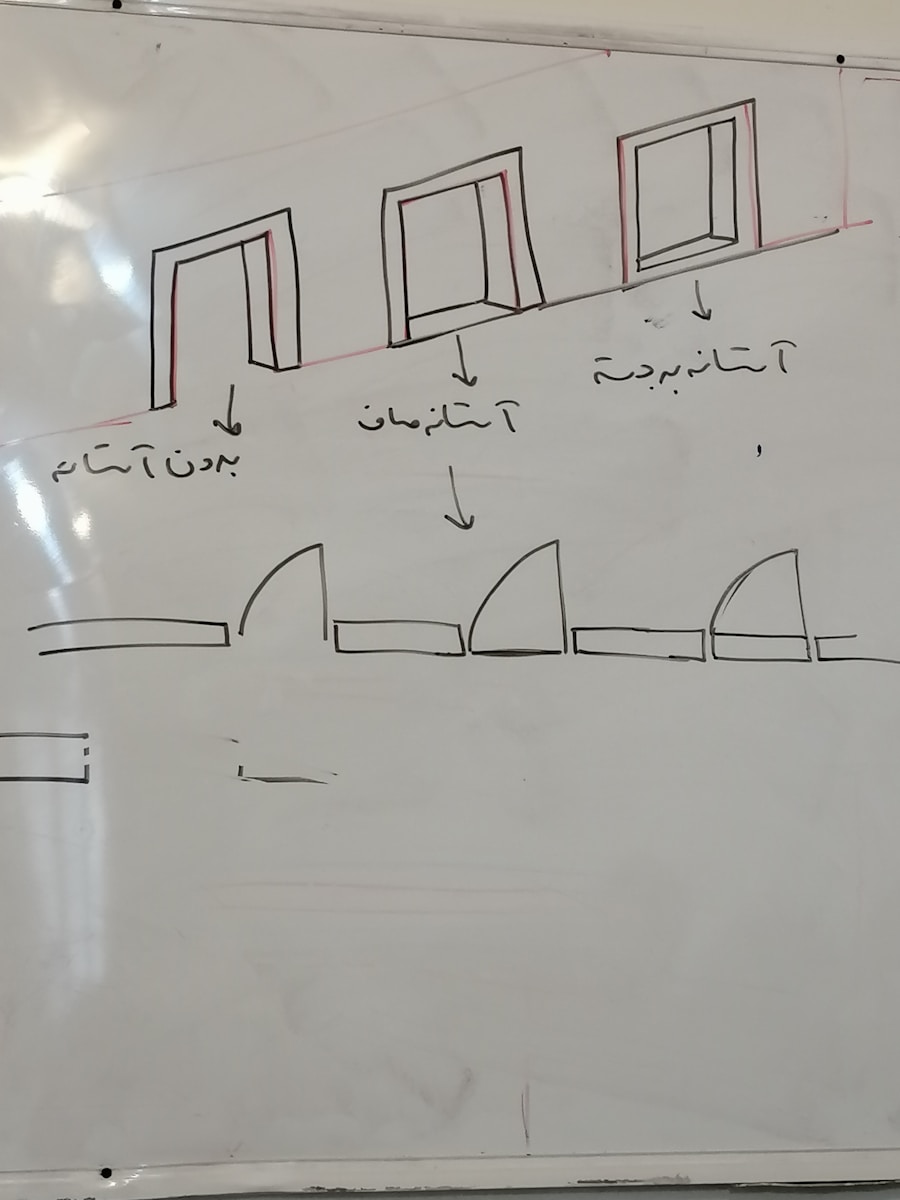

The fundamental building blocks of CNNs include convolutional layers, pooling layers, and fully connected layers, each serving a distinct purpose in the network’s operation. At the core of a CNN is the convolutional layer, which applies a series of filters or kernels to the input data. These filters slide over the input image, performing element-wise multiplications and summing the results to produce feature maps.
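As a rough illustration of the sliding-filter computation just described, the pure-Python sketch below computes a single-channel "valid" convolution (no padding, optional stride). The function name `conv2d` and the hand-written edge filter are illustrative assumptions, not any library's API. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes cross-correlation.

```python
def conv2d(image, kernel, stride=1):
    """Slide `kernel` over `image` (both 2D lists of numbers) without padding.

    Each output value is the sum of element-wise products between the kernel
    and the image patch beneath it. Like most deep learning libraries, this
    does not flip the kernel (strictly speaking, it is cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            top, left = i * stride, j * stride
            row.append(sum(
                image[top + di][left + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            ))
        feature_map.append(row)
    return feature_map


# Dark left half, bright right half: a single vertical edge.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]
# A hand-written vertical-edge filter (hypothetical); a trained CNN learns its own weights.
edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
print(conv2d(image, edge_kernel))  # → [[0, 27, 27, 0]]
```

The feature map is non-zero only where the window straddles the edge. This is the sense in which a filter "detects" a local pattern.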

Sliding learned filters across the input enables the network to learn local patterns, such as edges and textures, which are crucial for image recognition tasks. As the network deepens, subsequent layers can learn increasingly complex features by combining simpler ones identified in earlier layers. Pooling layers, which typically follow convolutional layers, reduce the spatial dimensions of the feature maps. This decreases the computational load and makes the representation more robust to small shifts of the input (local translation invariance).

This hierarchical feature extraction is what sets CNNs apart from traditional fully connected neural networks, allowing them to excel in tasks involving visual data.
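The pooling step can be sketched just as briefly. The hypothetical `max_pool2d` below (not a library call) implements max pooling over square windows. With the common 2x2 window and stride 2, it halves each spatial dimension.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Downsample a 2D feature map by keeping the maximum of each window."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            max(
                feature_map[i * stride + di][j * stride + dj]
                for di in range(size)
                for dj in range(size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


fm = [
    [1, 3, 2, 4],
    [5, 6, 7, 8],
    [9, 2, 1, 0],
    [3, 4, 5, 6],
]
print(max_pool2d(fm))  # → [[6, 8], [9, 6]]

# Moving a strong activation within its 2x2 window leaves the pooled output
# unchanged: the small-shift robustness that pooling provides.
print(max_pool2d([[9, 0], [0, 0]]) == max_pool2d([[0, 0], [0, 9]]))  # → True
```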

Key Takeaways

- Convolutional Neural Networks (CNNs) are a type of deep learning model designed for processing and analyzing visual data, such as images and videos.

- Training CNNs involves feeding input data through a series of convolutional, pooling, and fully connected layers, while adjusting the model’s parameters to minimize the difference between predicted and actual outputs.

- Optimizing CNNs can be achieved through regularization techniques such as dropout, together with batch normalization, to improve the model’s generalization and performance.

- CNNs can be applied to image recognition tasks by using pre-trained models, fine-tuning them on specific datasets, and evaluating their performance using metrics like accuracy and precision.

- Leveraging CNNs for object detection involves using techniques like region-based CNNs (such as Faster R-CNN) and single-shot detectors (such as SSD and YOLO) to identify and localize objects within images or videos.

Training Convolutional Neural Networks

Training a CNN involves feeding it a large dataset of labeled images and adjusting its parameters to minimize the difference between predicted outputs and actual labels. This process typically employs a technique known as backpropagation, which calculates gradients of the loss function with respect to each parameter in the network. The gradients indicate how much each parameter should be adjusted to reduce the loss, and this adjustment is performed using optimization algorithms such as Stochastic Gradient Descent (SGD) or Adam.
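To make the gradient-and-update loop concrete, here is a minimal pure-Python sketch. As a deliberate simplification, it uses a one-neuron linear model instead of a full CNN.

- `loss_and_grads` derives the gradients with the chain rule, the same rule backpropagation applies layer by layer.
- `sgd_step` applies a plain gradient-descent update.
- `Adam` follows the standard Adam update with bias-corrected moment estimates.

The toy data and all names are hypothetical.

```python
import math

# Toy data generated from y = 2x + 1 (an assumption for illustration).
DATA = [(x, 2.0 * x + 1.0) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]


def loss_and_grads(w, b):
    """Mean squared error of pred = w*x + b, plus dL/dw and dL/db.

    Chain rule: dL/dpred = 2*(pred - y), dpred/dw = x, dpred/db = 1.
    """
    n = len(DATA)
    loss = grad_w = grad_b = 0.0
    for x, y in DATA:
        err = (w * x + b) - y
        loss += err * err / n
        grad_w += 2.0 * err * x / n
        grad_b += 2.0 * err / n
    return loss, grad_w, grad_b


def sgd_step(params, grads, lr=0.05):
    """Move each parameter a small step against its gradient."""
    return [p - lr * g for p, g in zip(params, grads)]


class Adam:
    """Adam: scale each step using running averages of gradients (m) and squared gradients (v)."""

    def __init__(self, n_params, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params
        self.v = [0.0] * n_params
        self.t = 0

    def step(self, params, grads):
        self.t += 1
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)  # bias correction
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            new_params.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return new_params


# Full-batch loop for determinism; true SGD would use a random minibatch per step.
params = [0.0, 0.0]  # [w, b]
for _ in range(500):
    _, gw, gb = loss_and_grads(*params)
    params = sgd_step(params, [gw, gb])
print([round(p, 4) for p in params])  # → [2.0, 1.0]

# One Adam step moves each parameter by roughly lr, whatever the gradient's size.
adam = Adam(n_params=2, lr=0.1)
print(adam.step([0.0, 0.0], [-8.0, -2.0]))  # roughly [0.1, 0.1]
```

The contrast in the last line is the main practical difference. SGD's step grows with the gradient, while Adam normalizes it per parameter. Both the choice of optimizer and its settings therefore affect convergence behavior.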

The choice of optimizer can significantly impact the training speed and convergence behavior of the model. Data augmentation is a common strategy employed during training to enhance the diversity of the training dataset without actually collecting new data. Techniques such as rotation, scaling, flipping, and color adjustment can be applied to existing images, allowing the model to generalize better to unseen data.
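A few of these augmentations can be sketched on a tiny grayscale image stored as a list of rows. The function names are hypothetical. The rotation is limited to multiples of 90 degrees to keep the sketch short; real pipelines typically also use arbitrary angles, scaling and cropping.

```python
import random


def horizontal_flip(image):
    """Mirror a 2D image (list of rows) left to right."""
    return [row[::-1] for row in image]


def rotate90(image):
    """Rotate a 2D image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def adjust_brightness(image, factor, max_value=255):
    """Scale pixel intensities and clip them to the valid range [0, max_value]."""
    return [[min(max_value, max(0, round(p * factor))) for p in row] for row in image]


def augment(image, rng):
    """Apply a random combination of the transforms above."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    for _ in range(rng.randrange(4)):  # 0, 90, 180 or 270 degrees
        image = rotate90(image)
    return adjust_brightness(image, rng.uniform(0.8, 1.2))


img = [[10, 20], [30, 40]]
print(horizontal_flip(img))  # → [[20, 10], [40, 30]]
print(rotate90(img))         # → [[30, 10], [40, 20]]
print(augment(img, random.Random(0)))  # a randomly transformed copy of img
```

Each call to `augment` produces a different variant of the same labeled image. This is how augmentation adds diversity without collecting new data.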

Additionally, regularization techniques like dropout can be utilized to prevent overfitting by randomly deactivating a subset of neurons during training. This encourages the network to learn more robust features that are not overly reliant on any single neuron or feature map. The combination of these strategies helps ensure that CNNs not only perform well on training data but also maintain their performance on validation and test datasets.
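A minimal sketch of dropout on one layer's activations follows. It uses the "inverted dropout" variant common in modern frameworks: surviving activations are scaled up during training so that nothing needs to change at inference. The original dropout formulation instead scaled the weights at test time. The function name and signature are hypothetical.

```python
import random


def dropout(activations, p, rng, training=True):
    """Inverted dropout: zero each activation with probability p during training.

    Survivors are multiplied by 1 / (1 - p), which keeps the expected value of
    every activation unchanged. At inference the input passes through as-is.
    """
    if not training or p == 0.0:
        return list(activations)
    keep_scale = 1.0 / (1.0 - p)
    return [a * keep_scale if rng.random() >= p else 0.0 for a in activations]


rng = random.Random(42)
x = [1.0, 2.0, 3.0, 4.0]
print(dropout(x, p=0.5, rng=rng))                  # some entries zeroed, the rest doubled
print(dropout(x, p=0.5, rng=rng, training=False))  # → [1.0, 2.0, 3.0, 4.0]
```

Because a different random subset is dropped on every forward pass, no single neuron can be relied on. This encourages redundant, more robust features.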

Optimizing Convolutional Neural Networks

Optimizing CNNs involves fine-tuning various hyperparameters and architectural choices to enhance performance on specific tasks. Key hyperparameters include the learning rate, batch size, number of epochs, and the architecture itself, such as the number of convolutional layers and filters per layer. The learning rate determines the size of each parameter update. If it is set too high, updates can overshoot minima of the loss or even diverge; if it is too low, training takes much longer with little improvement.
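The effect of the learning rate is easy to see on the one-dimensional loss f(w) = w², whose gradient is 2w. Each gradient-descent step multiplies w by (1 − 2·lr), so the iteration converges only when 0 < lr < 1. The function and the three rates below are illustrative choices, not recommended values for a CNN.

```python
def gradient_descent(lr, steps=50, w=1.0):
    """Minimize f(w) = w**2 (gradient 2w) from w = 1 and return the final w."""
    for _ in range(steps):
        w -= lr * 2 * w
    return w


print(gradient_descent(0.001))  # too low: w has barely moved (about 0.9)
print(gradient_descent(0.1))    # reasonable: w is very close to the minimum at 0
print(gradient_descent(1.1))    # too high: every step overshoots and |w| explodes
```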

Another critical aspect of optimization is the choice of loss function, which quantifies how well the model’s predictions align with actual labels. For classification tasks, categorical cross-entropy is commonly used, while mean squared error is more appropriate for regression tasks. Learning rate scheduling can also improve convergence by adjusting the learning rate during training. The adjustment can follow a fixed schedule (for example, halving the rate every few epochs) or respond to a performance metric such as validation loss.
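Both loss functions and a metric-driven schedule fit in a short pure-Python sketch. The `ReduceOnPlateau` class is hypothetical. It is similar in spirit to PyTorch's `torch.optim.lr_scheduler.ReduceLROnPlateau` but much simplified, and its factor and patience defaults are illustrative.

```python
import math


def softmax(logits):
    """Convert raw class scores to probabilities (shifted by the max for numerical stability)."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(logits, target_class):
    """Negative log-probability that the model assigns to the correct class."""
    return -math.log(softmax(logits)[target_class])


def mean_squared_error(predictions, targets):
    """Average squared difference, the usual loss for regression outputs."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


class ReduceOnPlateau:
    """Multiply the learning rate by `factor` once validation loss has failed
    to improve for more than `patience` consecutive epochs."""

    def __init__(self, lr, factor=0.5, patience=2):
        self.lr, self.factor, self.patience = lr, factor, patience
        self.best = math.inf
        self.bad_epochs = 0

    def step(self, val_loss):
        if val_loss < self.best:
            self.best, self.bad_epochs = val_loss, 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs > self.patience:
                self.lr *= self.factor
                self.bad_epochs = 0
        return self.lr


print(categorical_cross_entropy([2.0, 0.5, -1.0], target_class=0))  # small: confident and correct
print(mean_squared_error([2.5, 0.0], [3.0, -0.5]))                   # → 0.25

scheduler = ReduceOnPlateau(lr=0.1)
for val_loss in [1.0, 0.9, 0.95, 0.96, 0.97]:  # hypothetical validation losses
    lr = scheduler.step(val_loss)
print(lr)  # → 0.05
```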

Hyperparameter tuning can be performed using methods such as grid search or random search, where different combinations are tested to identify the most effective configuration for a given problem.
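The difference between the two search strategies is easiest to see side by side. In the sketch below, `validation_score` is a hypothetical stand-in for "train a CNN with these settings and return its validation accuracy". It is a cheap formula that peaks at lr = 0.01 and batch size 64, so the example runs instantly. The search ranges are also illustrative assumptions.

```python
import itertools
import math
import random


def validation_score(lr, batch_size):
    """Hypothetical stand-in for training and evaluating a model (higher is better)."""
    return -((math.log10(lr) + 2) ** 2) - ((batch_size - 64) / 64) ** 2


def grid_search(lrs, batch_sizes):
    """Evaluate every combination of the listed values and keep the best."""
    return max(
        (validation_score(lr, bs), (lr, bs))
        for lr, bs in itertools.product(lrs, batch_sizes)
    )


def random_search(n_trials, rng):
    """Sample configurations at random: learning rate log-uniformly, batch size from a list."""
    best = None
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(-4, -1)
        bs = rng.choice([32, 64, 128])
        candidate = (validation_score(lr, bs), (lr, bs))
        if best is None or candidate > best:
            best = candidate
    return best


print(grid_search([0.1, 0.01, 0.001], [32, 64, 128]))  # best grid point: (0.01, 64)
print(random_search(20, random.Random(0)))
```

Grid search only ever tries the listed values. Random search spends the same budget on distinct learning rates, which helps when one hyperparameter matters much more than the others.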

Applying Convolutional Neural Networks to Image Recognition

| Model | Accuracy | Parameters | Training Time |
|---|---|---|---|
| LeNet-5 | 99.21% | 60,000 | 2 hours |
| AlexNet | 80.7% | 60 million | 5 days |
| VGG-16 | 92.7% | 138 million | 2 weeks |

Image recognition is one of the most prominent applications of CNNs, enabling machines to identify and classify objects within images accurately. The success of CNNs in this domain can be attributed to their ability to learn hierarchical representations of visual data. For instance, in a typical image classification task involving thousands of categories, a CNN can learn to recognize basic shapes in its initial layers and progressively build up to more complex structures like faces or animals in deeper layers.
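One way to see why deeper layers can represent larger structures is to compute a unit's receptive field: the region of the input image that can influence it. The hypothetical helper below applies the standard recurrence. Each layer widens the field by (kernel size − 1) times the product of all earlier strides. For example, three stacked 3x3 convolutions see a 7x7 patch, an observation the VGGNet design relies on.

```python
def receptive_field(layers):
    """Receptive field (in input pixels) of one unit after a stack of layers.

    `layers` is a list of (kernel_size, stride) pairs, ordered from input to output.
    """
    field, jump = 1, 1  # jump = distance in input pixels between adjacent units
    for kernel_size, stride in layers:
        field += (kernel_size - 1) * jump
        jump *= stride
    return field


print(receptive_field([(3, 1)] * 3))                      # three 3x3 convs → 7
print(receptive_field([(3, 1), (3, 1), (2, 2), (3, 1)]))  # conv, conv, 2x2 pool, conv → 10
```

Pooling and strided layers multiply the jump, so each later layer widens the field faster than the previous one. This is why a few downsampling stages let deep units respond to whole objects.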

A notable example of CNNs in image recognition is the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). AlexNet revolutionized the field by winning the 2012 challenge with a far lower error rate than competing approaches. Its innovations included a deeper architecture with more convolutional layers than earlier CNNs such as LeNet-5, and the use of ReLU activation functions, which help mitigate vanishing gradients. Subsequent models pushed depth further. VGGNet stacked small 3x3 filters to build 16- and 19-layer networks. ResNet introduced skip (residual) connections and used batch normalization, which allowed much deeper networks to be trained without the degradation in accuracy that plain deep networks suffer.
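The two ideas in this paragraph can be illustrated numerically. The sketch ignores the weights and looks only at the activation derivatives that backpropagation multiplies layer by layer. The sigmoid's derivative is at most 0.25, while ReLU's is exactly 1 for active units. `residual_block` follows the ResNet form output = ReLU(F(x) + x), with F standing in for the block's learned layers. All function names are hypothetical.

```python
import math


def sigmoid_grad(z):
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)  # largest value is 0.25, at z = 0


def relu(v):
    return [max(0.0, x) for x in v]


def relu_grad(z):
    return 1.0 if z > 0 else 0.0


# Product of activation derivatives across 10 layers (weights ignored).
print(sigmoid_grad(0.0) ** 10)  # at most 0.25**10, roughly 1e-6: the signal vanishes
print(relu_grad(3.0) ** 10)     # 1.0 for units that stay active


def residual_block(x, transform):
    """ResNet-style block: ReLU(F(x) + x), where `transform` plays the role of F."""
    fx = transform(x)
    return relu([a + b for a, b in zip(fx, x)])


def zero_transform(v):
    return [0.0] * len(v)


# If F outputs zeros, the block passes non-negative inputs through unchanged,
# so an extra block can easily learn to approximate the identity.
print(residual_block([1.0, 0.0, 3.0], zero_transform))  # → [1.0, 0.0, 3.0]
```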

Leveraging Convolutional Neural Networks for Object Detection

Object detection extends beyond simple image classification by not only identifying objects within an image but also localizing them with bounding boxes. This task is inherently more complex as it requires both classification and spatial awareness. CNNs have been pivotal in advancing object detection methodologies through architectures like Faster R-CNN and YOLO (You Only Look Once).

These models utilize region proposal networks (RPN) or single-stage detection approaches to efficiently predict object locations and classes simultaneously. Faster R-CNN employs a two-stage process where it first generates region proposals using a CNN and then classifies these proposals into various object categories while refining their bounding box coordinates. On the other hand, YOLO takes a different approach by treating object detection as a single regression problem, predicting bounding boxes and class probabilities directly from full images in one evaluation.

This results in significantly faster inference times, making YOLO particularly suitable for real-time applications such as video surveillance or autonomous driving.
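Both families of detector need a way to measure how well a predicted bounding box matches an object. A common choice is intersection over union (IoU): the overlap area divided by the combined area. Detectors of this kind typically use IoU thresholds when matching predictions to ground-truth boxes and when discarding duplicate predictions. The sketch below assumes boxes are given as corner coordinates (x1, y1, x2, y2); the function name is hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2), with x2 > x1 and y2 > y1."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero if the boxes do not overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # overlap 1, union 7 → 0.142857...
print(iou((0, 0, 1, 1), (2, 2, 3, 3)))  # → 0.0
```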

Harnessing Convolutional Neural Networks for Image Segmentation

Image segmentation is another critical application of CNNs that involves partitioning an image into multiple segments or regions for easier analysis. Unlike object detection, which provides bounding boxes around objects, segmentation aims to classify each pixel in an image into predefined categories. This fine-grained analysis is essential in various fields such as medical imaging, autonomous vehicles, and scene understanding.
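Per-pixel classification means that a segmentation network outputs one score map per class. The final label map picks the highest-scoring class at every pixel. The sketch below assumes scores are indexed as `class_scores[c][i][j]`; the function name and the two-class example are hypothetical.

```python
def segmentation_map(class_scores):
    """Turn per-class score maps into a label map via a per-pixel argmax.

    `class_scores[c][i][j]` is the network's score for class c at pixel (i, j).
    """
    num_classes = len(class_scores)
    height, width = len(class_scores[0]), len(class_scores[0][0])
    return [
        [max(range(num_classes), key=lambda c: class_scores[c][i][j]) for j in range(width)]
        for i in range(height)
    ]


scores = [
    [[0.9, 0.8], [0.3, 0.1]],  # class 0: background
    [[0.1, 0.2], [0.7, 0.9]],  # class 1: object
]
print(segmentation_map(scores))  # → [[0, 0], [1, 1]]
```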

One of the most influential architectures for semantic segmentation is U-Net, which was originally designed for biomedical image segmentation. U-Net employs an encoder-decoder structure with three parts:

- The encoder captures context through downsampling.
- The decoder recovers spatial resolution through upsampling.
- Skip connections pass high-resolution encoder feature maps to the matching decoder stages so that fine detail is not lost.

This architecture produces detailed segmentation maps that delineate object boundaries effectively.

Another notable approach is Mask R-CNN, which extends Faster R-CNN by adding a branch for predicting segmentation masks on each Region of Interest (RoI), thus providing both object detection and instance segmentation capabilities.

Expanding the Applications of Convolutional Neural Networks

The versatility of CNNs has led to their adoption across various domains beyond traditional image processing tasks. In natural language processing (NLP), for instance, CNNs have been successfully applied to text classification. They treat a sentence as a one-dimensional sequence of word embeddings, analogous to the two-dimensional pixel grid of an image. Convolutional filters spanning a few consecutive words act as n-gram detectors, allowing CNNs to capture local dependencies and contextual information.
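A single text filter can be sketched in a few lines. The filter below spans two consecutive words, so it scores bigrams. It is followed by a ReLU and max pooling over all positions ("max-over-time" pooling), a common pattern in sentence-classification CNNs. The 2-dimensional embeddings and the filter weights are hand-picked, hypothetical values; a real model learns them and uses far more dimensions.

```python
# Hypothetical 2-dimensional word embeddings (a real model learns or loads these).
EMBEDDINGS = {
    "not": [1.0, 0.0],
    "very": [0.0, 0.0],
    "good": [0.0, 1.0],
    "movie": [0.0, 0.0],
}


def embed(sentence):
    return [EMBEDDINGS[word] for word in sentence.split()]


def ngram_feature(embeddings, filt, bias=0.0):
    """Apply one convolutional filter along the sentence, then max-over-time pooling.

    `filt` holds one weight vector per word in the window, so a filter with two
    rows scores every bigram. The sentence must have at least len(filt) words.
    """
    width = len(filt)
    activations = []
    for t in range(len(embeddings) - width + 1):
        score = bias
        for k in range(width):
            score += sum(w * e for w, e in zip(filt[k], embeddings[t + k]))
        activations.append(max(0.0, score))  # ReLU
    return max(activations)  # strongest match anywhere in the sentence


# Hand-picked filter that fires on a "not"-like word followed by a "good"-like word.
NOT_GOOD = [[1.0, 0.0], [0.0, 1.0]]
print(ngram_feature(embed("not good movie"), NOT_GOOD, bias=-1.0))   # → 1.0
print(ngram_feature(embed("very good movie"), NOT_GOOD, bias=-1.0))  # → 0.0
```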

In addition to NLP, CNNs are making strides in fields such as audio processing and video analysis. For audio signals, spectrogram representations can be treated similarly to images, allowing CNNs to learn features relevant for tasks like speech recognition or music genre classification. In video analysis, CNNs can be extended into three dimensions (3D CNNs) to capture temporal dynamics alongside spatial features, enabling applications such as action recognition or video summarization.
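Whether the input is a spectrogram (a 2D grid of frequency by time) or a video clip (frames by height by width), the convolution's output size follows the same per-dimension formula: floor((n + 2·padding − kernel) / stride) + 1. The helper below is a small worked example of that arithmetic. The clip and spectrogram sizes are illustrative assumptions.

```python
def conv_output_shape(input_shape, kernel, stride, padding):
    """Output size of a convolution along each dimension:
    floor((n + 2*padding - kernel) / stride) + 1.
    Works for any number of dimensions."""
    return tuple(
        (n + 2 * p - k) // s + 1
        for n, k, s, p in zip(input_shape, kernel, stride, padding)
    )


# 3D CNN on a video clip of 16 frames at 112x112 pixels.
clip = (16, 112, 112)
print(conv_output_shape(clip, (3, 3, 3), (1, 1, 1), (1, 1, 1)))  # → (16, 112, 112)
print(conv_output_shape(clip, (3, 3, 3), (1, 2, 2), (1, 1, 1)))  # → (16, 56, 56)

# 2D CNN on a spectrogram with 128 frequency bins and 100 time frames.
print(conv_output_shape((128, 100), (3, 3), (2, 2), (1, 1)))     # → (64, 50)
```

The stride of (1, 2, 2) in the second call keeps every frame while halving the spatial size. This is one way a 3D CNN can preserve temporal detail for action recognition.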

Future Developments in Convolutional Neural Networks

As research in deep learning continues to evolve, several promising directions are emerging for future developments in CNNs. One area of focus is improving model efficiency through techniques like model pruning and quantization, which aim to reduce the size and computational requirements of CNNs with little or no loss in accuracy. These advancements are particularly crucial for deploying models on edge devices with limited resources.
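Both techniques can be sketched on a flat list of weights. The first function performs unstructured magnitude pruning, which zeroes the smallest weights. The other two perform symmetric 8-bit quantization, which stores each weight as an integer in [−127, 127] plus one shared float scale. Production toolkits add steps such as fine-tuning after pruning, per-channel scales and calibration data. The function names here are hypothetical.

```python
def magnitude_prune(weights, fraction):
    """Zero out the `fraction` of weights with the smallest absolute values."""
    k = int(len(weights) * fraction)
    smallest = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in smallest else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric linear quantization: integer codes in [-127, 127] plus one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float weights."""
    return [q * scale for q in codes]


w = [0.1, -0.5, 0.05, 0.9]
print(magnitude_prune(w, 0.5))   # → [0.0, -0.5, 0.0, 0.9]
codes, scale = quantize_int8(w)
print(codes)                     # each weight now fits in one signed byte
print(dequantize(codes, scale))  # close to w, off by at most scale / 2 per weight
```

Pruning saves memory and compute only when sparse weights are stored and executed efficiently. Quantization shrinks storage roughly fourfold compared with 32-bit floats regardless of sparsity.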

Another exciting avenue is the integration of CNNs with other types of neural networks, such as recurrent neural networks (RNNs) or transformers, to leverage their strengths in handling sequential data or long-range dependencies. This hybrid approach could lead to more robust models capable of tackling complex tasks that require both spatial and temporal reasoning. Furthermore, ongoing research into unsupervised and self-supervised learning methods holds promise for reducing reliance on large labeled datasets while still achieving high performance across various applications.

The future landscape of CNNs will likely be shaped by these innovations as researchers continue to push the boundaries of what is possible with deep learning technologies. As we move forward, it will be fascinating to observe how these developments influence not only academic research but also practical applications across industries ranging from healthcare to entertainment.
