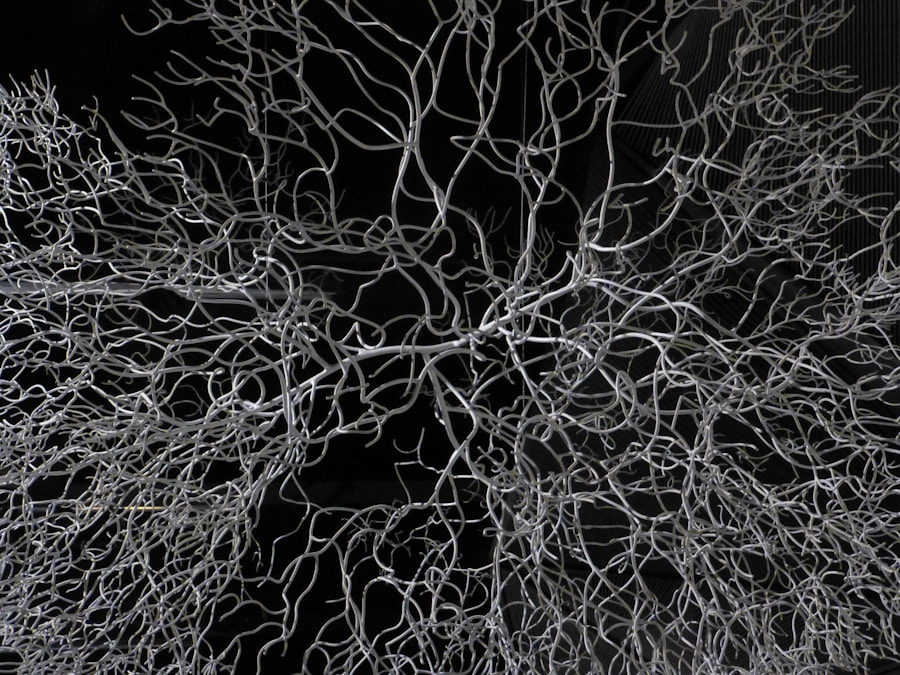

Neural networks are computational models inspired by the human brain’s architecture and functioning. They consist of interconnected nodes, or neurons, organized in layers: an input layer, one or more hidden layers, and an output layer. Each neuron receives input signals, processes them through an activation function, and passes the output to the next layer.

This structure allows neural networks to learn complex patterns and relationships within data, making them particularly effective for tasks such as image recognition, natural language processing, and predictive analytics. The fundamental building block of a neural network is the artificial neuron, a simplified mathematical model loosely based on biological neurons. Each artificial neuron multiplies its inputs by learned weights, sums the results together with a bias term, and then applies a non-linear activation function to produce an output.
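A single artificial neuron can be sketched in a few lines of plain Python. This is a minimal illustration, assuming a sigmoid activation and a bias term added to the weighted sum. The function names and example weights are hypothetical and were chosen only so the arithmetic is easy to follow.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1); fine for moderate z."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Weighted sum of the inputs plus a bias, passed through a sigmoid activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Three inputs with hand-picked (hypothetical) weights and bias.
output = neuron([1.0, 2.0, 3.0], [0.5, -0.25, 0.1], bias=-0.3)
print(round(output, 4))
```

Here the weighted sum (0.5 - 0.5 + 0.3) plus the bias of -0.3 is essentially zero, so the sigmoid outputs roughly 0.5.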

The choice of activation function, such as sigmoid, ReLU (Rectified Linear Unit), or tanh, can significantly influence the network’s performance. The non-linearity itself is essential: without it, any stack of layers would reduce to a single linear (affine) transformation and could only model linear relationships. By adjusting the weights and biases through a process called training, neural networks can learn to approximate functions that map inputs to desired outputs, enabling them to make predictions or classifications on new data.
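To compare the three activation functions named above, here is a small sketch in plain Python. The sigmoid is written in a numerically stable form (an implementation choice, not something the text prescribes) so that large negative inputs do not overflow `math.exp`.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic sigmoid, output in (0, 1); branch avoids overflow for very negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def relu(z: float) -> float:
    """Rectified Linear Unit: passes positive values, zeroes out negatives."""
    return max(0.0, z)

def tanh(z: float) -> float:
    """Hyperbolic tangent, output in (-1, 1) and centred on zero."""
    return math.tanh(z)

for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  relu={relu(z):.3f}  tanh={tanh(z):.3f}")
```

ReLU is cheap to compute and does not saturate for positive inputs, while sigmoid and tanh flatten out for large magnitudes. That flattening is one reason the choice of activation affects how easily a network trains.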

Key Takeaways

- Neural networks are a type of machine learning model inspired by the human brain, consisting of interconnected nodes that process and analyze data.

- Training a neural network typically involves feeding it labeled data and adjusting the weights and biases of the connections between nodes so that its predictions become more accurate.

- Optimizing neural networks involves techniques such as regularization (including dropout) and batch normalization, which improve performance, stabilize training, and help prevent overfitting.

- Neural networks are applied to real-world problems such as image recognition, natural language processing, and financial forecasting.

- Advanced neural network architectures, such as convolutional neural networks and recurrent neural networks, have been developed to tackle specific types of data and tasks with greater efficiency and accuracy.

- Ethical considerations in neural network development include bias, privacy, and accountability, all of which call for careful oversight and regulation.

- Future directions in neural network research include more efficient training algorithms, interpretable models, and the integration of neural networks with fields such as robotics and healthcare.

- Harnessing the potential of neural networks requires a deep understanding of their capabilities and limitations, as well as a commitment to ethical and responsible development and application.

Training Neural Networks

Training a neural network involves adjusting its weights based on a dataset to minimize the difference between the predicted outputs and the actual targets. This process typically employs a method known as backpropagation, which calculates the gradient of the loss function with respect to each weight by applying the chain rule of calculus. The loss function quantifies how well the network’s predictions align with the true values; common examples include mean squared error for regression tasks and cross-entropy loss for classification tasks.
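To make the loss functions and the chain rule concrete, the sketch below implements mean squared error and binary cross-entropy in plain Python. It then derives the gradient of the cross-entropy loss for a single sigmoid neuron by hand. The example rests on simplifying assumptions (one input, one weight, one bias). Real backpropagation applies the same chain-rule step layer by layer. The closing finite-difference comparison is a common sanity check for hand-derived gradients.

```python
import math

def mse(preds, targets):
    """Mean squared error, typical for regression."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(preds)

def binary_cross_entropy(probs, labels, eps=1e-12):
    """Cross-entropy for binary classification; eps guards against log(0)."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(probs)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss_and_grad(w, b, x, y):
    """Forward pass and chain-rule gradients for one sigmoid neuron with BCE loss."""
    z = w * x + b            # pre-activation
    p = sigmoid(z)           # predicted probability
    loss = binary_cross_entropy([p], [y])
    # Chain rule: dL/dw = dL/dp * dp/dz * dz/dw.
    # For sigmoid followed by BCE, dL/dp * dp/dz simplifies to (p - y).
    dz = p - y
    return loss, dz * x, dz  # (loss, dL/dw, dL/db)

# Compare the analytic gradient with a central finite-difference estimate.
w, b, x, y = 0.8, -0.2, 1.5, 1.0
loss, dw, db = loss_and_grad(w, b, x, y)
h = 1e-6
numeric_dw = (loss_and_grad(w + h, b, x, y)[0] - loss_and_grad(w - h, b, x, y)[0]) / (2 * h)
print(f"analytic dL/dw = {dw:.6f}, numeric dL/dw = {numeric_dw:.6f}")
```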

The training process is iterative and usually requires multiple epochs, where one epoch is a complete pass through the entire training dataset. Within an epoch, the data is typically split into mini-batches, and the weights are updated after each batch using an optimization algorithm such as stochastic gradient descent (SGD) or Adam. These algorithms adjust the weights in the direction that reduces the loss, allowing the network to learn from its mistakes.
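The epoch and mini-batch structure can be sketched with a tiny linear model trained by mini-batch SGD in plain Python. The model, the data, and the hyperparameter values are hypothetical choices for illustration. Adam is not shown; only the plain SGD update rule is implemented.

```python
import random

def train_sgd(data, lr=0.05, epochs=200, batch_size=4, seed=0):
    """Fit y ~ w*x + b with mini-batch stochastic gradient descent on MSE loss."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(data)
    for epoch in range(epochs):          # one epoch = one full pass over the data
        rng.shuffle(data)                # new sample order each epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradients of the mean squared error over this mini-batch.
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            # Step against the gradient, scaled by the learning rate.
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Hypothetical noise-free toy data drawn from y = 2x + 1.
points = [(x / 10, 2 * (x / 10) + 1) for x in range(-10, 11)]
w, b = train_sgd(points)
print(f"w={w:.3f}, b={b:.3f}")
```

Because the toy data is noise-free, the estimates should approach w = 2 and b = 1. The weights change after every mini-batch, so with 21 points and a batch size of 4, each epoch performs six updates.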

The learning rate, a hyperparameter that determines the size of weight updates, plays a crucial role in this process; too high a learning rate can lead to divergence, while too low a rate can result in slow convergence.
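The effect of the learning rate is easy to see on a one-dimensional toy problem. The sketch below runs gradient descent on f(w) = w**2, whose gradient is 2w, so each update multiplies w by (1 - 2 * lr). This is a toy function rather than a neural network. For this particular function, any learning rate above 1 makes |w| grow at every step, while a very small one barely moves w in 20 steps.

```python
def gradient_descent(lr: float, steps: int = 20, w0: float = 5.0) -> float:
    """Minimise f(w) = w**2 (minimum at w = 0) with plain gradient descent."""
    w = w0
    for _ in range(steps):
        grad = 2 * w          # derivative of w**2
        w = w - lr * grad     # each step multiplies w by (1 - 2*lr)
    return w

for lr in (0.001, 0.1, 0.45, 1.1):
    print(f"lr={lr:<6} final w={gradient_descent(lr):.4g}")
```

The smallest rate leaves w close to its starting value (slow convergence), the middle rates approach the minimum, and lr = 1.1 overshoots further on every step (divergence).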

Optimizing Neural Networks

Optimizing neural networks extends beyond adjusting weights during training; it encompasses various strategies aimed at improving performance and efficiency. One critical aspect is hyperparameter tuning: choosing good values for settings that govern the training process but are not learned from the data itself. Common hyperparameters include the learning rate, batch size, number of hidden layers, and number of neurons per layer.

Grid search and random search are two common ways to explore hyperparameter combinations. Grid search tries every combination from a predefined set of values, while random search samples combinations at random; both keep the configuration that performs best on held-out validation data. Another vital optimization technique is regularization, which helps prevent overfitting, a scenario where a model performs well on training data but poorly on unseen data. L1 and L2 regularization add a penalty to the loss function based on the magnitude of the weights (the sum of absolute values for L1, the sum of squares for L2), discouraging overly complex models.
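Grid search and L2 regularization can be combined in one small plain-Python sketch. A linear model is trained by full-batch gradient descent on mean squared error plus an L2 penalty, and every (learning rate, penalty strength) pair is scored on a validation split. The data, the grid values, and the function names are hypothetical. The L1 penalty is defined for comparison but not used in training, because its gradient is not defined at zero.

```python
import itertools
import random

def l1_penalty(weights, lam):
    """L1 penalty: lam times the sum of absolute weight values."""
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    """L2 penalty: lam times the sum of squared weight values."""
    return lam * sum(w * w for w in weights)

def predict(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def fit(train, lr, lam, epochs=300):
    """Full-batch gradient descent on MSE + l2_penalty(w, lam); the bias is not penalised."""
    n = len(train)
    w = [0.0] * len(train[0][0])
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in train:
            err = predict(w, b, x) - y
            for j, xj in enumerate(x):
                grad_w[j] += 2 * err * xj / n
            grad_b += 2 * err / n
        # The derivative of lam * sum(w**2) with respect to each weight is 2 * lam * w.
        w = [wi - lr * (gw + 2 * lam * wi) for wi, gw in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def mse(data, w, b):
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)

# Hypothetical toy data: 5 features, but only the first one influences y.
rng = random.Random(42)
data = []
for _ in range(60):
    x = [rng.uniform(-1.0, 1.0) for _ in range(5)]
    data.append((x, 3.0 * x[0] + rng.gauss(0.0, 0.3)))
train, val = data[:40], data[40:]

# Grid search: try every (learning rate, L2 strength) pair, score on validation data.
results = {}
for lr, lam in itertools.product([0.01, 0.1], [0.0, 0.01, 0.1, 1.0]):
    w, b = fit(train, lr, lam)
    results[(lr, lam)] = mse(val, w, b)

best = min(results, key=results.get)
print("best (lr, lambda):", best, "validation MSE:", round(results[best], 4))
```

A random search would replace the fixed grid with sampled values, for example `lam = 10 ** rng.uniform(-3, 0)`, evaluated for a fixed budget of trials.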

Dropout is another popular regularization technique. During training, a random subset of neurons is temporarily dropped (their outputs set to zero) at each step, forcing the network to learn more robust features that generalize better to new data. Additionally, batch normalization can stabilize and accelerate training by normalizing each layer's inputs across a mini-batch, which often leads to faster convergence.
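Both techniques can be sketched in plain Python. The dropout function below implements the common "inverted" variant, which rescales the surviving activations during training so that nothing needs to change at inference time. The batch normalization function normalizes a single feature across one mini-batch. This is a simplified sketch with hypothetical function names: a full batch normalization layer also tracks running statistics for use at inference, which is omitted here.

```python
import math
import random

def dropout(activations, p, training, rng):
    """Inverted dropout: zero each unit with probability p during training and
    scale survivors by 1/(1-p) so the expected activation is unchanged."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)  # at inference time dropout is a no-op
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalise one feature across a mini-batch to zero mean and unit variance,
    then apply a learnable scale (gamma) and shift (beta)."""
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]

rng = random.Random(0)
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, training=True, rng=rng))
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, training=False, rng=rng))
print([round(v, 3) for v in batch_norm([2.0, 4.0, 6.0, 8.0])])
```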

Applying Neural Networks to Real-World Problems

| Real-World Problem | Neural Network Application | Performance Metrics |
|---|---|---|
| Medical Diagnosis | Diagnosing diseases from medical images | Accuracy, Sensitivity, Specificity |
| Financial Forecasting | Predicting stock prices and market trends | Mean Absolute Error, Root Mean Squared Error |
| Natural Language Processing | Language translation and sentiment analysis | BLEU score, F1 score |
| Autonomous Vehicles | Recognizing objects and making driving decisions | Intersection over Union, Average Precision |
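Most of the metrics in the table can be computed with a few lines of plain Python. The sketch below covers accuracy, sensitivity, specificity, and F1 for binary classification, MAE and RMSE for regression, and IoU for axis-aligned bounding boxes given as (x1, y1, x2, y2), which is an assumed box format. BLEU and average precision involve more machinery and are not shown. The example labels are made up.

```python
import math

def confusion_counts(y_true, y_pred):
    """Count true positives, true negatives, false positives, false negatives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn

def classification_metrics(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0   # recall / true positive rate
    specificity = tn / (tn + fp) if tn + fp else 0.0   # true negative rate
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * sensitivity / (precision + sensitivity)
          if precision + sensitivity else 0.0)
    return {"accuracy": accuracy, "sensitivity": sensitivity,
            "specificity": specificity, "f1": f1}

def mae(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def iou(box_a, box_b):
    """Intersection over Union for axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0

y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
m = classification_metrics(y_true, y_pred)
print(m)
print(mae([3.0, 5.0], [2.0, 7.0]), rmse([3.0, 5.0], [2.0, 7.0]))
print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```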

Neural networks have found applications across diverse fields due to their ability to model complex relationships in data. In healthcare, for instance, convolutional neural networks (CNNs) are used for medical image analysis, helping radiologists detect anomalies in X-rays or MRI scans. These networks can be trained on large datasets of labeled images, learning to identify patterns indicative of diseases such as cancer or pneumonia.
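The core operation inside a CNN is the convolution, in which a small kernel slides over the image and produces a feature map. The sketch below is a plain-Python illustration under simplifying assumptions: a single-channel image, a hand-made 3x3 edge-detecting kernel (a trained CNN learns its kernels from data), no padding, and stride 1. Like most deep learning libraries, it actually computes a cross-correlation, which is what "convolution" usually means in this context.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (strictly, cross-correlation): slide the kernel over
    the image and take the weighted sum of the covered pixels at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            s = 0.0
            for u in range(kh):
                for v in range(kw):
                    s += image[i + u][j + v] * kernel[u][v]
            row.append(s)
        out.append(row)
    return out

def relu_map(fmap):
    """Apply ReLU element-wise to a feature map."""
    return [[max(0.0, x) for x in row] for row in fmap]

# Toy 5x5 "image" with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-made vertical-edge detector (hypothetical; learned in a real CNN).
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]
feature_map = relu_map(conv2d(image, kernel))
for row in feature_map:
    print(row)
```

The feature map responds strongly wherever the kernel window straddles the edge and is zero over the flat region, which is the kind of local pattern a CNN's early layers pick up.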

Integrating neural networks into diagnostic processes can improve accuracy and reduce the time required for analysis. In finance, neural networks are used for credit scoring and fraud detection. By analyzing historical transaction data and customer profiles, these models can identify patterns associated with creditworthiness or fraudulent behavior.

Recurrent neural networks (RNNs), particularly long short-term memory (LSTM) networks, are well suited to sequential data and are often used for time series forecasting of stock prices or economic indicators. The ability of neural networks to learn from vast amounts of data makes them valuable tools in sectors where timely and accurate decision-making is critical.
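The gating that lets an LSTM carry information across time steps can be sketched for a single hidden unit in plain Python. The parameter values below are hypothetical and untrained. A real LSTM uses vectors and weight matrices learned from data, but the per-step equations have the same structure.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM. For each gate, p holds an input weight (w*),
    a recurrent weight (u*) and a bias (b*): forget f, input i, candidate g, output o."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # how much old memory to keep
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # how much new info to write
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate memory content
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # how much memory to expose
    c = f * c_prev + i * g      # updated cell state
    h = o * math.tanh(c)        # new hidden state (the unit's output)
    return h, c

# Hypothetical, untrained parameters purely for illustration.
params = {"wf": 0.5, "uf": 0.1, "bf": 1.0,
          "wi": 0.6, "ui": 0.2, "bi": 0.0,
          "wg": 1.0, "ug": 0.3, "bg": 0.0,
          "wo": 0.4, "uo": 0.1, "bo": 0.0}

h, c = 0.0, 0.0
for x in [0.1, 0.4, -0.2, 0.3]:   # e.g. a short, normalised time series
    h, c = lstm_step(x, h, c, params)
    print(f"x={x:+.1f}  h={h:+.4f}  c={c:+.4f}")
```

The cell state `c` is updated additively through the forget and input gates, which is what helps LSTMs retain information over longer sequences than a plain RNN.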

Exploring Advanced Neural Network Architectures

As research in neural networks progresses, advanced architectures have emerged that extend their capabilities and broaden their applicability. Generative adversarial networks (GANs) are one such innovation. A GAN consists of two networks trained against each other: a generator that produces synthetic samples and a discriminator that tries to distinguish them from real data. As the discriminator gets better at spotting fakes, the generator is pushed to produce more realistic data. GANs have been applied to generating high-quality images, creating art, and even synthesizing realistic human voices.
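The competition between the two networks shows up in their loss functions. The sketch below computes the standard binary cross-entropy discriminator loss and the commonly used "non-saturating" generator loss from discriminator outputs. It assumes those outputs are probabilities that a sample is real. The numbers are made up, and the networks themselves and the alternating training loop are not shown.

```python
import math

EPS = 1e-12  # guards against log(0)

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: D should output ~1 on real samples and ~0 on generated ones."""
    real_term = sum(-math.log(max(d, EPS)) for d in d_real) / len(d_real)
    fake_term = sum(-math.log(max(1.0 - d, EPS)) for d in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when D labels generated samples as real."""
    return sum(-math.log(max(d, EPS)) for d in d_fake) / len(d_fake)

# Made-up discriminator outputs (probability that each sample is real).
confident_d = {"real": [0.9, 0.95], "fake": [0.05, 0.1]}   # D easily spots fakes
fooled_d = {"real": [0.6, 0.55], "fake": [0.5, 0.45]}      # G's samples look plausible

for name, d in [("confident D", confident_d), ("fooled D", fooled_d)]:
    print(name,
          "D loss:", round(discriminator_loss(d["real"], d["fake"]), 3),
          "G loss:", round(generator_loss(d["fake"]), 3))
```

When the discriminator is confident, its own loss is low and the generator's loss is high; when the generator's samples fool the discriminator, the situation reverses. Training alternates between lowering each loss in turn.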

Transformers are another groundbreaking architecture that has revolutionized natural language processing (NLP). Whereas RNNs process a sequence one element at a time, transformers use self-attention to weigh the importance of every word in a sentence relative to every other word, computing these weights for all positions in parallel. This allows them to capture long-range dependencies more effectively and has led to significant advances in tasks such as machine translation and text summarization.
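Self-attention can be sketched as single-head scaled dot-product attention in plain Python. This is a simplified illustration: the embeddings and identity projection matrices are hypothetical, and masking, multiple heads, and positional information are all omitted. Each row of the printed weight matrix shows how much one token attends to every token in the sequence.

```python
import math

def softmax(xs):
    m = max(xs)                       # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vec):
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a list of token vectors."""
    queries = [matvec(w_q, t) for t in tokens]
    keys = [matvec(w_k, t) for t in tokens]
    values = [matvec(w_v, t) for t in tokens]
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this query against every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three 2-dimensional token embeddings and identity projections (illustrative only;
# in a real transformer w_q, w_k and w_v are learned and much larger).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(tokens, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Because every query is scored against every key independently, all rows could be computed at once, which is the parallelism that distinguishes transformers from step-by-step RNNs.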

Models like BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) have set new benchmarks in NLP tasks by leveraging large-scale pre-training on diverse text corpora.

Ethical Considerations in Neural Network Development

Bias in AI Systems

One major concern is bias in AI systems; if a neural network is trained on biased data, it may perpetuate or even exacerbate existing inequalities. For example, facial recognition systems have been shown to exhibit racial bias when trained predominantly on images of lighter-skinned individuals.
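Bias of this kind is usually surfaced by disaggregated evaluation, which measures a model's performance separately for each group rather than only in aggregate. The sketch below illustrates the idea with made-up labels for two hypothetical groups. The overall accuracy is 60%, which hides the fact that the model is far less accurate for one group.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}

# Made-up predictions for two hypothetical groups "A" and "B".
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, "accuracy gap:", round(gap, 2))
```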

Importance of Transparency and Accountability

Transparency and accountability are also critical ethical considerations in neural network development. As these models become increasingly complex and opaque, understanding their decision-making processes becomes challenging. This lack of interpretability can lead to mistrust among users and stakeholders.

Towards Explainable AI

Efforts are underway to develop explainable AI techniques that provide insights into how neural networks arrive at specific decisions or predictions. Ensuring that AI systems are transparent not only fosters trust but also enables users to challenge or question decisions made by these systems.
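One simple family of explainability techniques is perturbation-based attribution, which changes one input at a time and observes how the output moves. The sketch below applies occlusion (replacing a feature with a baseline value) to a stand-in model. The model, its weights, and the baseline of 0.0 are hypothetical, and practical tools use more sophisticated variants of this idea.

```python
import math

def model(features):
    """Stand-in 'black box': a hypothetical logistic scorer with fixed weights."""
    weights = [2.0, -1.0, 0.0]
    z = sum(w * x for w, x in zip(weights, features)) + 0.5
    return 1.0 / (1.0 + math.exp(-z))

def occlusion_attribution(f, x, baseline=0.0):
    """Score each feature by how much the output drops when that feature is
    replaced with a baseline value, holding the other features fixed."""
    base_output = f(x)
    attributions = []
    for i in range(len(x)):
        perturbed = list(x)
        perturbed[i] = baseline
        attributions.append(base_output - f(perturbed))
    return attributions

x = [1.0, 2.0, 3.0]
scores = occlusion_attribution(model, x)
for i, a in enumerate(scores):
    print(f"feature {i}: attribution {a:+.3f}")
```

A positive attribution means the feature pushed the score up, a negative one means it pushed the score down, and feature 2, which the model ignores, receives zero.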

Future Directions in Neural Network Research

The future of neural network research is poised for exciting developments as researchers continue to explore new architectures and methodologies. One promising direction is the integration of neural networks with other machine learning paradigms, such as reinforcement learning (RL). Combining these approaches can lead to more robust models capable of learning from both structured data and dynamic environments.

For instance, RL has been successfully applied to training agents for complex tasks such as playing video games and controlling robots. Another area ripe for exploration is neuromorphic computing, which aims to design hardware that mimics the brain’s structure and functioning. This approach could lead to more energy-efficient neural networks capable of processing information in real time while consuming significantly less power than traditional computing architectures.

As we move towards an era where AI systems are embedded in everyday devices—from smartphones to autonomous vehicles—the demand for efficient neural network implementations will only grow.

Harnessing the Potential of Neural Networks

Neural networks represent a transformative technology with vast potential across various domains. Their ability to learn from data and model complex relationships has already led to significant advancements in fields such as healthcare, finance, and natural language processing. As researchers continue to innovate with advanced architectures and optimization techniques, the capabilities of neural networks will expand further.

However, with this potential comes responsibility; ethical considerations must guide the development and deployment of these technologies to ensure they benefit society as a whole. By addressing issues such as bias, transparency, and accountability, we can harness the power of neural networks while fostering trust among users and stakeholders. The future of neural networks holds promise not only for technological advancement but also for creating a more equitable and just society through responsible AI practices.
