Recurrent Neural Networks (RNNs) represent a significant advancement in the field of artificial intelligence, particularly in the realm of sequence prediction and temporal data processing. Unlike traditional feedforward neural networks, RNNs possess a unique architecture that allows them to maintain a form of memory. This memory is crucial for tasks where context and order are essential, such as language modeling or time series forecasting.

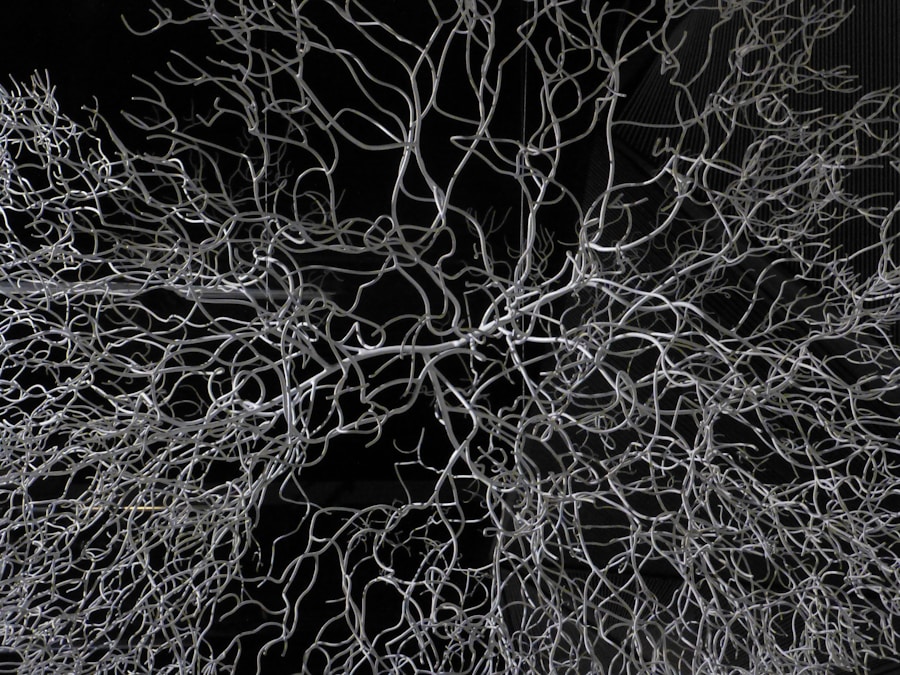

The fundamental mechanism behind RNNs is that, at each time step, they combine the current input with a hidden state carried over from the previous time step, effectively creating a loop within the network. This looping structure enables RNNs to capture dependencies across a sequence, making them particularly adept at handling data where the order of inputs matters. When unrolled over time, an RNN can be visualized as a chain of repeating modules, each containing the same neural network layer with shared weights.

At each time step, the network receives an input and produces an output while also passing its hidden state to the next time step. This hidden state acts as a memory that retains information about previous inputs, allowing the network to learn patterns over time. However, this design also introduces complexities, particularly when it comes to training the network.
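The hidden-state update described above can be sketched in a few lines. This is a minimal pure-Python sketch of a standard (Elman-style) RNN, assuming a tanh activation, zero initial state and small random weights; the helper names (`rnn_step`, `rnn_forward`, `random_matrix`) are illustrative and not taken from any library.

```python
import math
import random

def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One RNN step: h_t = tanh(W_xh @ x_t + W_hh @ h_{t-1} + b_h)."""
    h_new = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new

def rnn_forward(xs, W_xh, W_hh, b_h):
    """Apply the same cell (same weights) at every time step, threading the hidden state through."""
    h = [0.0] * len(b_h)  # initial hidden state: all zeros
    states = []
    for x in xs:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

def random_matrix(rows, cols, scale=0.5, seed=0):
    """Small uniform random weights, seeded for reproducibility."""
    rng = random.Random(seed)
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

input_size, hidden_size = 3, 4
W_xh = random_matrix(hidden_size, input_size, seed=1)
W_hh = random_matrix(hidden_size, hidden_size, seed=2)
b_h = [0.0] * hidden_size

sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
states = rnn_forward(sequence, W_xh, W_hh, b_h)
print(len(states), len(states[-1]))  # 3 4
```

Because the final hidden state depends on every earlier step, feeding the same inputs in a different order generally yields a different final state, which is exactly the order sensitivity the text describes.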

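Training uses the backpropagation through time (BPTT) algorithm, which unrolls the network across the time steps of a sequence and propagates gradients back through every step so the weights can be updated. Because the gradient reaching an early time step is a product of many per-step factors, it can shrink toward zero (vanishing gradients) or grow very large (exploding gradients), both of which hinder learning on long sequences.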
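A toy calculation shows why long products of per-step factors are a problem. The sketch below assumes a deliberately simplified scalar RNN: first a linear recurrence `h_t = w * h_{t-1} + x_t`, where each step contributes exactly a factor of `w` to the gradient, then a tanh recurrence, where each factor is `w * (1 - h_t**2)`. The function names are hypothetical, chosen for illustration.

```python
import math

def gradient_through_time(w, steps):
    """d h_T / d h_0 for the linear recurrence h_t = w * h_{t-1} + x_t."""
    grad = 1.0
    for _ in range(steps):
        grad *= w  # chain rule: multiply by d h_t / d h_{t-1} = w
    return grad

def tanh_rnn_gradient(w, xs, h0=0.0):
    """d h_T / d h_0 for the scalar tanh recurrence h_t = tanh(w * h_{t-1} + x_t)."""
    h = h0
    grad = 1.0
    for x in xs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # d h_t / d h_{t-1}; tanh' = 1 - tanh^2 <= 1
    return grad

for w in (0.5, 1.0, 1.5):
    print(f"w={w}: gradient after 1, 10, 50 steps =",
          [gradient_through_time(w, T) for T in (1, 10, 50)])
print("tanh RNN, w=0.9, 50 steps:", tanh_rnn_gradient(0.9, [0.5] * 50))
```

With `|w| < 1` the gradient decays geometrically, with `|w| > 1` it blows up, and the tanh derivative (at most 1) only makes the decay faster. Real RNNs replace the scalar `w` with a recurrent weight matrix, but the same multiplicative effect applies.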

Key Takeaways

- Recurrent Neural Networks (RNNs) are a type of neural network designed to work with sequential data, making them well-suited for tasks like natural language processing and time series analysis.

- RNNs have a wide range of applications, including language translation, speech recognition, sentiment analysis, and stock market prediction.

- Training RNNs can be challenging due to issues like vanishing and exploding gradients and long training times. Techniques such as gradient clipping (which limits exploding gradients) and starting from pre-trained models (which can shorten training) help address these issues.

- RNNs have limitations such as difficulty in capturing long-term dependencies and a tendency to forget information from earlier time steps, which can impact their performance on certain tasks.

- Long Short-Term Memory (LSTM) networks are a type of RNN designed to address the vanishing gradient problem and better capture long-term dependencies, leading to improved performance on tasks like language modeling and speech recognition.
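Gradient clipping, mentioned in the takeaways above, is simple to sketch. The version below assumes clipping by global norm: if the combined L2 norm of all gradients exceeds a threshold, every gradient is scaled down by the same factor. The function name and the list-of-lists gradient layout are illustrative assumptions; frameworks provide their own versions (PyTorch, for example, offers `torch.nn.utils.clip_grad_norm_`).

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient vectors so their combined L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm measured before clipping.
    """
    total = math.sqrt(sum(g * g for vec in grads for g in vec))
    if total <= max_norm or total == 0.0:
        return [list(vec) for vec in grads], total
    scale = max_norm / total  # same factor for every gradient, so the direction is preserved
    return [[g * scale for g in vec] for vec in grads], total

grads = [[30.0, 40.0], [0.0]]  # combined norm is 50
clipped, norm_before = clip_by_global_norm(grads, max_norm=5.0)
print(norm_before, clipped)
```

Scaling all gradients by one shared factor keeps the update direction unchanged and only limits its size, which is why global-norm clipping is usually preferred over clipping each value independently.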

Applications of Recurrent Neural Networks

RNNs have found applications across a diverse range of fields, showcasing their versatility in handling sequential data. One of the most prominent is natural language processing (NLP), where RNNs are used for tasks such as language translation, sentiment analysis, and text generation. In machine translation, for instance, an RNN processes a sentence word by word, carrying context forward in its hidden state so that relationships between words, including words that are far apart, can inform the translation. This allows for more accurate translations that consider the nuances of language rather than treating each word in isolation.

Another significant application of RNNs is speech recognition. By processing audio as a sequence of short acoustic frames, RNNs can model the temporal dynamics of speech. They can learn to recognize patterns in phonemes and words, enabling systems to transcribe spoken language into text with high accuracy.

RNNs are also employed in financial forecasting, where they analyze historical stock prices and economic indicators to predict future trends. By capturing temporal dependencies in financial data, RNNs can provide insights that inform investment strategies and risk management.

Training and Fine-Tuning Recurrent Neural Networks

Training RNNs involves several critical steps that require careful consideration to ensure optimal performance. The first step is data preparation, which includes preprocessing the input sequences to make them suitable for training. This often involves normalizing the data, tokenizing text for NLP tasks, or segmenting time series data into manageable sequences.
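For time series, the normalization and segmentation steps can be sketched as follows. This minimal version assumes z-score normalization and fixed-size sliding windows, where each window of past values is paired with the next value as its prediction target. Both functions are hypothetical helpers, not part of any library.

```python
def normalize(series):
    """Z-score normalization: subtract the mean and divide by the standard deviation."""
    mean = sum(series) / len(series)
    var = sum((v - mean) ** 2 for v in series) / len(series)
    std = var ** 0.5 or 1.0  # guard against a constant series (std == 0)
    return [(v - mean) / std for v in series], mean, std

def make_windows(series, window):
    """Split a series into (input window, next value) training pairs."""
    pairs = []
    for start in range(len(series) - window):
        pairs.append((series[start:start + window], series[start + window]))
    return pairs

raw = [10.0, 12.0, 13.0, 15.0, 14.0, 16.0, 18.0]
scaled, mean, std = normalize(raw)
pairs = make_windows(scaled, window=3)
print(len(pairs))  # 4
```

In a real pipeline the mean and standard deviation would typically be computed on the training portion only and then reused for validation and test data, so that no information leaks from the evaluation sets.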

Once the data is prepared, it is essential to define the architecture of the RNN, including the number of layers and units per layer. Hyperparameters such as learning rate, batch size, and dropout rates also play a crucial role in determining how well the network learns from the data. Fine-tuning an RNN typically involves adjusting these hyperparameters based on validation performance.

Techniques such as grid search or random search can be employed to systematically explore different combinations of hyperparameters. Additionally, regularization methods like dropout can help prevent overfitting by randomly deactivating neurons during training. Monitoring metrics such as loss and accuracy on both training and validation datasets is vital for assessing the model’s performance and making necessary adjustments.
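Random search, one of the hyperparameter strategies mentioned above, can be sketched in a few lines. In this sketch, `fake_validation_loss` is a hypothetical stand-in for "train the RNN with this configuration and measure its validation loss", and the search-space values are illustrative assumptions, not recommendations.

```python
import random

def random_search(evaluate, space, trials, seed=0):
    """Sample `trials` configurations from `space`; keep the one with the lowest validation loss."""
    rng = random.Random(seed)
    best_config, best_loss = None, float("inf")
    for _ in range(trials):
        config = {name: rng.choice(values) for name, values in space.items()}
        loss = evaluate(config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss

# Hypothetical search space; sensible ranges depend on the task and data.
space = {
    "learning_rate": [1e-4, 3e-4, 1e-3, 3e-3],
    "hidden_size": [64, 128, 256],
    "dropout": [0.0, 0.2, 0.5],
}

def fake_validation_loss(config):
    # Stand-in for an actual training run followed by validation.
    return (abs(config["learning_rate"] - 1e-3) * 100
            + abs(config["dropout"] - 0.2)
            + config["hidden_size"] / 1000)

best, loss = random_search(fake_validation_loss, space, trials=20)
print(best, loss)
```

Replacing the random sampling with an enumeration of `itertools.product(*space.values())` would turn this into an exhaustive grid search, which becomes expensive quickly as the number of hyperparameters grows.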

Early stopping can also help: it halts training once validation performance stops improving, which avoids unnecessary epochs and reduces the risk of overfitting.
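The early-stopping rule can be sketched as a small function over per-epoch validation losses. This sketch assumes a "patience" criterion: training stops after `patience` consecutive epochs without an improvement larger than `min_delta`. The function name and parameters are illustrative.

```python
def early_stopping_epoch(val_losses, patience, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of per-epoch validation losses.

    Training stops once `patience` consecutive epochs fail to improve on the
    best loss so far by more than `min_delta`.
    """
    best_loss = float("inf")
    best_epoch = 0
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0  # new best: reset the counter
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch  # never triggered: ran every epoch

losses = [1.00, 0.80, 0.70, 0.69, 0.71, 0.72, 0.73, 0.74]
print(early_stopping_epoch(losses, patience=3))  # (6, 3)
```

In practice the model weights saved at `best_epoch` are usually restored after stopping, since the last epochs before the stop were, by definition, no better.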

Challenges and Limitations of Recurrent Neural Networks

| Challenge or limitation | Description |
|---|---|
| Vanishing and exploding gradients | During backpropagation through time, gradients can shrink toward zero or grow very large, which makes it difficult for the model to learn long-term dependencies and can destabilize training. |
| Difficulty capturing long-term dependencies | Information from early time steps fades as it passes through many updates of the hidden state, which can lead to poor performance on tasks that require context over a long range. |
| Difficulty capturing contextual information | RNNs may struggle to extract the relevant context from sequences, especially when the data is noisy or ambiguous. |
| Training time and computational cost | Time steps must be processed in order, which limits parallelism; training can be time-consuming and computationally expensive, especially for large datasets and complex models. |
| Batching variable-length inputs | An RNN can process a sequence of any length one step at a time, but batching sequences of different lengths for efficient training requires padding them to a common length and masking the padded steps. |
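In practice, the variable-length issue mostly shows up when sequences are batched together. A common workaround is to pad every sequence to the longest length in the batch and keep a mask marking which steps are real, so padded steps can be excluded from the loss. The sketch below assumes right-padding with a constant value; the helper names are hypothetical (frameworks provide their own utilities, such as PyTorch's `torch.nn.utils.rnn.pad_sequence` and `pack_padded_sequence`).

```python
def pad_batch(sequences, pad_value=0.0):
    """Right-pad variable-length sequences to a common length.

    Returns the padded batch and a mask (1 = real step, 0 = padding).
    """
    max_len = max(len(seq) for seq in sequences)
    padded, mask = [], []
    for seq in sequences:
        n_pad = max_len - len(seq)
        padded.append(list(seq) + [pad_value] * n_pad)
        mask.append([1] * len(seq) + [0] * n_pad)
    return padded, mask

def masked_mean(values, mask):
    """Average per-step values (e.g. losses) over real steps only, ignoring padding."""
    total = sum(v * m for row_v, row_m in zip(values, mask) for v, m in zip(row_v, row_m))
    count = sum(m for row in mask for m in row)
    return total / count

batch = [[0.5, 0.1, 0.9], [0.3], [0.2, 0.4]]
padded, mask = pad_batch(batch)
print(padded)  # [[0.5, 0.1, 0.9], [0.3, 0.0, 0.0], [0.2, 0.4, 0.0]]
print(mask)    # [[1, 1, 1], [1, 0, 0], [1, 1, 0]]
```

Without the mask, the padded zeros would be averaged into the loss as if they were real observations, biasing training toward shorter sequences.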

Despite their strengths, RNNs face several challenges that can impede their effectiveness in certain applications. One of the most significant is the vanishing gradient problem, which occurs when gradients become exceedingly small during backpropagation through time. This makes it difficult for the network to learn long-range dependencies within sequences, because earlier inputs end up having little influence on later outputs. Consequently, RNNs may struggle with tasks that require understanding context over extended sequences, such as long paragraphs of text or lengthy time series.

Another limitation of traditional RNNs is their computational inefficiency on long sequences. Because each time step depends on the hidden state from the previous one, the steps must be processed in order, so RNNs cannot fully exploit the parallel processing capabilities of modern hardware such as GPUs. This leads to longer training times than architectures like convolutional neural networks (CNNs) or transformers, which can process all positions of a sequence in parallel during training.

Additionally, RNNs can be sensitive to hyperparameter choices: small changes in learning rate or network architecture can lead to significant variations in performance. These challenges have prompted researchers to explore alternative architectures that address these limitations while retaining the strengths of RNNs.

Improving Performance with Long Short-Term Memory (LSTM) Networks

To overcome some of the inherent limitations of traditional RNNs, Long Short-Term Memory (LSTM) networks were introduced as a specialized type of RNN designed to better capture long-range dependencies within sequences. LSTMs incorporate a more complex architecture that includes memory cells and gating mechanisms—specifically input gates, output gates, and forget gates—that regulate the flow of information through the network. This design allows LSTMs to retain relevant information over extended periods while discarding irrelevant data, effectively mitigating the vanishing gradient problem.
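One step of the gating described above can be sketched as follows. This assumes the widely used LSTM formulation with forget, input and output gates plus a tanh candidate update, all computed from the concatenation of the previous hidden state and the current input. The helper names (`lstm_step`, `init_params`, `affine`) and the random initialization are illustrative assumptions, not any library's API.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W @ v + b for a list-of-lists matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step with forget (f), input (i) and output (o) gates and a candidate update (g)."""
    z = h_prev + x  # list concatenation: [h_{t-1}, x_t]
    f = [sigmoid(v) for v in affine(params["W_f"], params["b_f"], z)]
    i = [sigmoid(v) for v in affine(params["W_i"], params["b_i"], z)]
    o = [sigmoid(v) for v in affine(params["W_o"], params["b_o"], z)]
    g = [math.tanh(v) for v in affine(params["W_g"], params["b_g"], z)]
    # Cell state: keep part of the old memory (f) and write part of the new candidate (i).
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # Hidden state: a gated (o) view of the squashed cell state.
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases for each of the four gate blocks."""
    rng = random.Random(seed)
    params = {}
    for gate in "fiog":
        params[f"W_{gate}"] = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size + input_size)]
                               for _ in range(hidden_size)]
        params[f"b_{gate}"] = [0.0] * hidden_size
    return params

params = init_params(input_size=2, hidden_size=3)
h, c = [0.0] * 3, [0.0] * 3
for x in ([1.0, 0.0], [0.0, 1.0], [1.0, 1.0]):
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

The key design point is the additive cell update `c = f * c_prev + i * g`: when the forget gate is near 1 and the input gate near 0, the cell state passes through a step almost unchanged, which is what lets information (and gradients) survive over many time steps.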

The gating mechanisms in LSTMs enable them to learn when to remember or forget information based on the context provided by the input sequence. For example, when processing a sentence for sentiment analysis, an LSTM can prioritize certain words while ignoring others that may not contribute meaningfully to the overall sentiment conveyed. This capability makes LSTMs particularly effective for tasks involving long sequences or complex dependencies, such as language translation or video analysis.

Furthermore, LSTMs have been widely adopted in various applications beyond NLP, including music generation and anomaly detection in time series data.

Implementing Recurrent Neural Networks in Natural Language Processing

The implementation of RNNs in natural language processing has transformed how machines understand and generate human language. One common approach uses RNNs in sequence-to-sequence models, which are particularly useful for tasks like machine translation and summarization. In these models, one RNN (the encoder) compresses an input sequence into a fixed-length context vector, and another RNN (the decoder) generates an output sequence from that vector. This architecture handles variable-length input and output sequences flexibly, making it suitable for diverse NLP applications.

Attention mechanisms have also been integrated with RNN architectures to improve their performance further. Rather than relying on a single fixed-length vector, attention lets the model focus on specific parts of the input sequence when generating each part of the output sequence. For instance, when translating a sentence from English to French, attention lets the model weigh each word in the source sentence by its relevance to the French word currently being generated. This yields more accurate translations that better capture nuance and context than RNN approaches without attention.
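The weighting step of attention can be sketched as follows. The text does not specify a scoring function, so this sketch assumes simple dot-product scoring between the decoder's current hidden state and each encoder hidden state; other choices, such as additive scoring, are also common. The encoder and decoder states here are made-up numbers standing in for real RNN outputs.

```python
import math

def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot_product_attention(decoder_state, encoder_states):
    """Score each encoder state against the decoder state, normalize the scores,
    and return the weighted sum of encoder states (the context vector) and the weights."""
    scores = [sum(d * e for d, e in zip(decoder_state, enc)) for enc in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [sum(w * enc[k] for w, enc in zip(weights, encoder_states)) for k in range(dim)]
    return context, weights

# Hypothetical encoder hidden states for a 3-word source sentence.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
decoder_state = [2.0, 0.0]
context, weights = dot_product_attention(decoder_state, encoder_states)
print([round(w, 3) for w in weights], context)
```

A fresh context vector is computed for every output word, so the decoder is no longer limited to one fixed-length summary of the whole source sentence.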

Leveraging Recurrent Neural Networks for Time Series Analysis

RNNs are particularly well-suited for time series analysis due to their ability to model temporal dependencies effectively. In applications such as stock price prediction or weather forecasting, RNNs can analyze historical data points to identify patterns and trends over time. By feeding sequential data into an RNN, analysts can leverage its memory capabilities to make predictions about future values based on past observations.

For instance, consider a scenario where an organization seeks to forecast sales based on historical sales data and external factors like marketing campaigns or seasonal trends. An RNN can be trained on this historical data to learn how these factors influence sales over time. By capturing both short-term fluctuations and long-term trends through its recurrent structure, the RNN can provide valuable insights that inform business strategies and decision-making processes.
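One way to give an RNN both historical sales and external factors is to build one input vector per time step. This is a sketch under assumptions of our own: sales are z-scored, a marketing campaign is a 0/1 flag, and the month is encoded as a sine/cosine pair so that December and January end up close together. The feature choice and the `build_step_features` name are illustrative only.

```python
import math

def build_step_features(sales, campaign_active, months):
    """Combine sales with external factors into one input vector per time step.

    Features (an illustrative choice): z-scored sales, a 0/1 campaign flag,
    and a sine/cosine encoding of the month (1..12) to reflect seasonality.
    """
    mean = sum(sales) / len(sales)
    std = (sum((s - mean) ** 2 for s in sales) / len(sales)) ** 0.5 or 1.0
    steps = []
    for s, flag, month in zip(sales, campaign_active, months):
        angle = 2 * math.pi * (month - 1) / 12  # map the month onto a circle
        steps.append([(s - mean) / std, float(flag), math.sin(angle), math.cos(angle)])
    return steps

sales = [120.0, 135.0, 150.0, 160.0, 210.0, 190.0]
campaign = [0, 0, 1, 1, 1, 0]
months = [7, 8, 9, 10, 11, 12]
inputs = build_step_features(sales, campaign, months)
print(len(inputs), len(inputs[0]))  # 6 4
```

Each of these vectors would be fed to the RNN at its time step, letting the recurrent state learn how campaigns and seasons interact with the sales history.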

Additionally, variations like LSTM networks can further enhance predictive accuracy by addressing issues related to long-range dependencies within time series data.

Future Developments and Trends in Recurrent Neural Networks

As research continues to evolve in the field of artificial intelligence, several trends are emerging that may shape the future development of recurrent neural networks. One notable trend is the integration of RNNs with other architectures such as transformers and convolutional neural networks (CNNs). This hybrid approach aims to combine the strengths of different models while mitigating their weaknesses: transformers excel at capturing global dependencies through self-attention, while CNNs are efficient at processing spatial hierarchies.

Another area of exploration involves improving training techniques for RNNs through advances in optimization algorithms and regularization methods. Techniques such as adaptive learning rates and advanced dropout strategies are being investigated to improve convergence speed and model robustness during training. Furthermore, there is growing interest in unsupervised learning approaches that allow RNNs to learn from unlabeled data, which could significantly expand their applicability in domains where labeled datasets are scarce.

In addition to these technical advancements, ethical considerations surrounding AI deployment are becoming increasingly important. As RNNs are applied in sensitive areas such as healthcare or finance, ensuring transparency and fairness in their decision-making processes will be paramount. Researchers are actively exploring methods for interpreting RNN outputs and understanding how these models arrive at specific predictions or classifications.

Overall, recurrent neural networks continue to be a vital area of research within artificial intelligence, with ongoing developments promising to enhance their capabilities and broaden their applications across diverse fields.
